Revolutionizing AI - The 2024 Nobel Prize in Physics and the Breakthroughs in Neural Networks | (Fri 04 Oct 2024 02:07)

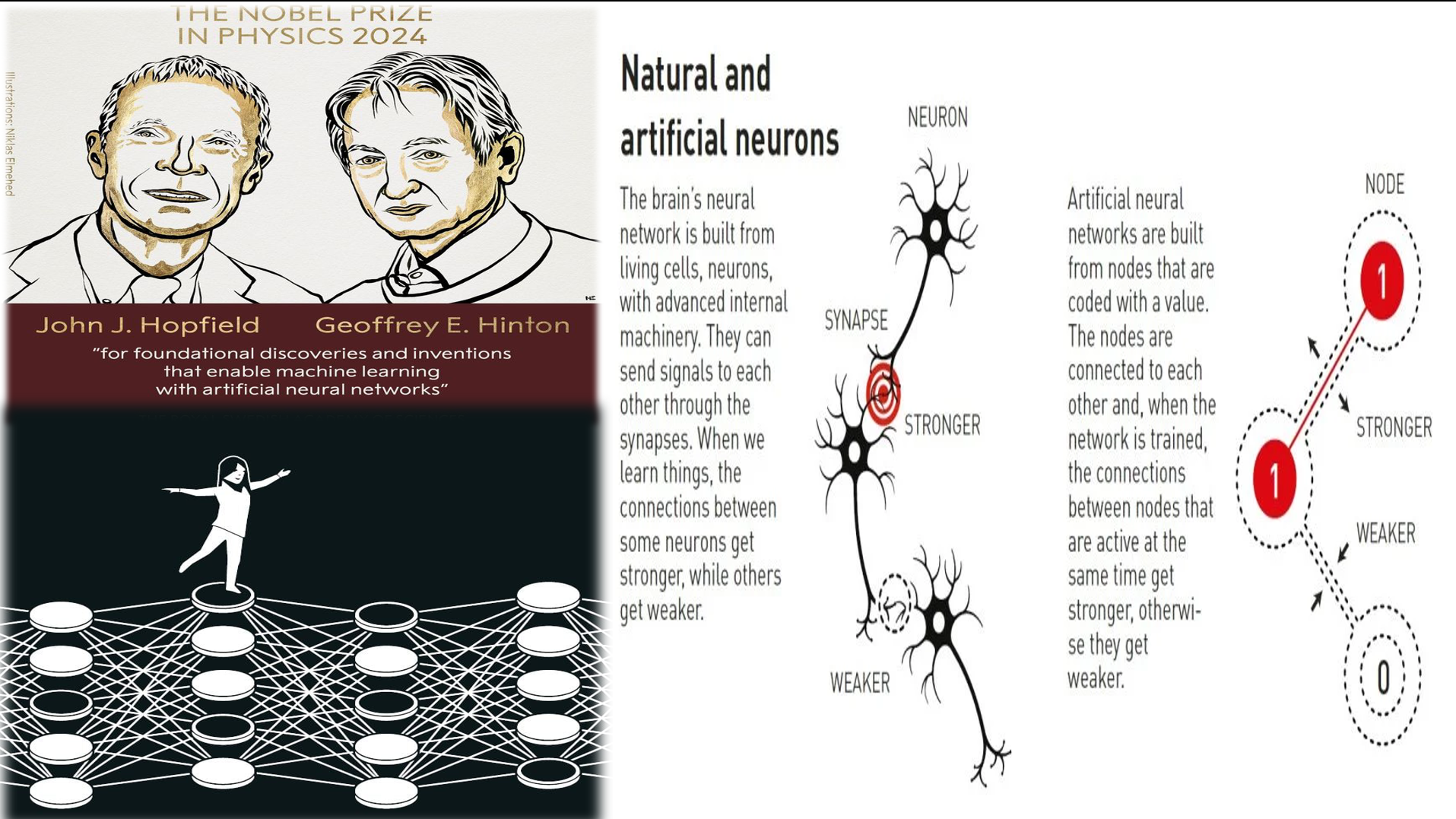

The 2024 Nobel Prize in Physics: A Breakthrough in Artificial Neural Networks

The 2024 Nobel Prize in Physics was awarded to John Hopfield and Geoffrey Hinton for foundational discoveries and inventions that enable machine learning with artificial neural networks (ANNs), a core technology in modern machine learning and artificial intelligence (AI). Their research changed how machines learn from data and underlies progress in fields such as image recognition, natural language processing, and autonomous systems.

What are Artificial Neural Networks (ANNs)?

Artificial Neural Networks are algorithms inspired by the structure and function of the human brain. They consist of interconnected units called nodes or neurons that process information collaboratively.

1. Structure and Function

- Each node receives inputs, multiplies them by weights, sums the results, applies an activation function, and passes the output to the next layer.
- These nodes are connected like synapses in a biological brain, forming layers that allow the system to detect patterns and make decisions.
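To make the description of a node concrete, here is a minimal sketch in plain Python. The sigmoid activation, the function names, and every number are assumptions picked for illustration; real networks use many more nodes and often other activation functions.

```python
import math

def sigmoid(x):
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(weighted_sum)

def layer_output(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron_output(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical values: two inputs -> two hidden neurons -> one output neuron.
hidden = layer_output([1.0, 0.5], [[0.4, -0.2], [0.3, 0.8]], [0.0, -0.1])
output = neuron_output(hidden, [1.0, -1.0], 0.0)
```

The output of the hidden layer becomes the input of the next neuron, which is all that "passing the result to the next layer" means here.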

2. Learning Process

- ANNs learn through a training process in which the strengths of connections (weights) are adjusted to reduce the gap between the network's outputs and the expected outcomes.
- Repeated over many examples, this process improves the network's performance.
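As a rough illustration of weight adjustment, the sketch below trains a single linear neuron with gradient descent on squared error. The training rule, the learning rate, and the toy data are assumptions chosen to keep the example small; they are not taken from the laureates' work.

```python
def train_linear_neuron(samples, learning_rate=0.1, epochs=200):
    """Fit prediction = w * x + b by nudging w and b against the error."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, target in samples:
            prediction = w * x + b
            error = prediction - target
            # For the loss 0.5 * error**2, the gradient is error * x for w
            # and error for b, so step in the opposite direction.
            w -= learning_rate * error * x
            b -= learning_rate * error
    return w, b

# Hypothetical data generated from y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
w, b = train_linear_neuron(samples)
```

After training, `w` and `b` should sit close to 2 and 1, the values that generated the data.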

3. Applications in AI

- ANNs enable machines to perform human-like tasks such as image and speech recognition, translation, and decision-making.
- For instance, AI systems can recognize faces or translate languages by learning from massive datasets.

John Hopfield’s Contribution: The Hopfield Network

John Hopfield introduced the Hopfield Network, a type of recurrent neural network that laid the foundation for modern neural computation.

1. Associative Memory

- Hopfield networks allow machines to recall or reconstruct patterns from partial or noisy inputs, mimicking how humans recognize familiar things even when incomplete.

2. Physics Meets Cognition

- Hopfield used principles from statistical physics, like atomic spin behavior, to model cognitive processes, creating a bridge between physics and neuroscience.

3. Low-Energy States

- The network stores patterns as low-energy states of an energy function. When presented with a distorted input, each update lowers the energy until the network settles into a stored pattern, typically the one closest to the input, effectively denoising the data.

4. Hebbian Learning

- Hopfield networks set their weights with a rule based on Donald Hebb's principle, often summarized as "Neurons that fire together, wire together." The connection between two units is strengthened when they are active together across the stored patterns.
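The ideas in this section (Hebbian weights, an energy function, and recall from a noisy input) fit in a short plain-Python sketch. It assumes units that take values +1 or -1 and are updated one at a time; the two stored patterns and all function names are made up for the example.

```python
def train_hopfield(patterns):
    """Hebbian rule: w[i][j] grows when units i and j agree in a stored pattern."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / len(patterns)
    return weights

def energy(weights, state):
    """E = -1/2 * sum over i, j of w[i][j] * s[i] * s[j]."""
    n = len(state)
    return -0.5 * sum(weights[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))

def recall(weights, state, max_sweeps=10):
    """Update one unit at a time until a full sweep changes nothing."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(weights[i][j] * state[j] for j in range(len(state)))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state

# Two hypothetical patterns of eight units each.
stored = [[1, 1, 1, 1, -1, -1, -1, -1],
          [1, -1, 1, -1, 1, -1, 1, -1]]
weights = train_hopfield(stored)
noisy = [-1, 1, 1, 1, -1, -1, -1, -1]  # first pattern with unit 0 flipped
restored = recall(weights, noisy)
```

Here `restored` matches the first stored pattern, and `energy(weights, restored)` is lower than `energy(weights, noisy)`: the correction of the flipped unit is a step downhill in energy.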

Geoffrey Hinton’s Contribution: Boltzmann Machines

Geoffrey Hinton advanced neural network theory by co-developing the Boltzmann Machine with Terrence Sejnowski in the mid-1980s. It is a stochastic neural network that learns probability distributions over its inputs using methods from statistical physics.

1. Probabilistic Learning

- Using the Boltzmann distribution from statistical physics, the model assigns each possible state a probability that rises as its energy falls, so low-energy states are the most likely ones.
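The link between energy and probability can be shown on a network small enough to enumerate. The sketch below assumes binary units (0 or 1), a temperature of 1, and hypothetical weights; listing every state like this only works for a handful of units.

```python
import math
from itertools import product

def energy(state, weights, biases):
    """E(s) = -sum over pairs i<j of w[i][j]*s[i]*s[j] - sum over i of b[i]*s[i]."""
    n = len(state)
    pair_term = sum(weights[i][j] * state[i] * state[j]
                    for i in range(n) for j in range(i + 1, n))
    bias_term = sum(b * s for b, s in zip(biases, state))
    return -pair_term - bias_term

def boltzmann_probabilities(weights, biases, temperature=1.0):
    """P(s) = exp(-E(s) / T) / Z, where Z sums over every possible state."""
    states = list(product([0, 1], repeat=len(biases)))
    unnormalized = [math.exp(-energy(s, weights, biases) / temperature)
                    for s in states]
    z = sum(unnormalized)
    return {s: u / z for s, u in zip(states, unnormalized)}

# Hypothetical two-unit network: a positive weight rewards both units being on.
weights = [[0.0, 2.0], [2.0, 0.0]]
biases = [0.0, 0.0]
probs = boltzmann_probabilities(weights, biases)
```

With these weights the state `(1, 1)` has the lowest energy and therefore the highest probability, while the other three states tie.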

2. Visible and Hidden Nodes

- Boltzmann Machines contain:
  - Visible nodes: Directly interact with input data.
  - Hidden nodes: Interact internally, enabling the system to discover and model hidden patterns.

3. Restricted Boltzmann Machines (RBM)

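- In a Restricted Boltzmann Machine, nodes within the same layer are not connected to each other: links run only between visible and hidden nodes. The restricted design was first proposed by Paul Smolensky; Hinton and colleagues later developed fast training methods that made it practical.
- Stacking RBMs layer by layer, as in Hinton's 2006 work on deep belief networks, helped revive interest in deep learning and paved the way for the many-layered models used in today's AI.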
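One way to see why the restriction helps: with no links inside a layer, every hidden unit can be sampled independently given the visible units, and vice versa. The sketch below shows a single sampling round trip in plain Python. It is an illustration under assumed weights and zero biases, not a training procedure, and all names are hypothetical.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def hidden_probabilities(visible, weights, hidden_bias):
    """P(h_j = 1 | v). With no hidden-to-hidden links, each h_j depends only on v."""
    return [
        sigmoid(hidden_bias[j] + sum(v * weights[i][j] for i, v in enumerate(visible)))
        for j in range(len(hidden_bias))
    ]

def visible_probabilities(hidden, weights, visible_bias):
    """P(v_i = 1 | h). With no visible-to-visible links, each v_i depends only on h."""
    return [
        sigmoid(visible_bias[i] + sum(h * weights[i][j] for j, h in enumerate(hidden)))
        for i in range(len(visible_bias))
    ]

def sample(probabilities, rng):
    """Draw a 0/1 value for each unit from its probability of being on."""
    return [1 if rng.random() < p else 0 for p in probabilities]

def gibbs_step(visible, weights, visible_bias, hidden_bias, rng):
    """One round trip v -> h -> v', sampling a whole layer at a time."""
    hidden = sample(hidden_probabilities(visible, weights, hidden_bias), rng)
    reconstruction = sample(visible_probabilities(hidden, weights, visible_bias), rng)
    return hidden, reconstruction

# Hypothetical RBM with 3 visible and 2 hidden units;
# weights[i][j] links visible unit i to hidden unit j.
weights = [[0.5, -0.3], [0.1, 0.8], [-0.6, 0.2]]
rng = random.Random(0)
hidden, reconstruction = gibbs_step([1, 0, 1], weights, [0.0] * 3, [0.0] * 2, rng)
```

In a general Boltzmann Machine the units inside a layer influence each other, so this layer-at-a-time shortcut is not available and sampling is much slower.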

Other Key Advances in Neural Networks

1. Transformers

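- An architecture built around self-attention, which lets every position in a sequence weigh every other position when building its representation. The original design paired an encoder with a decoder.
- Key to breakthroughs in natural language processing (NLP), enabling models like GPT (decoder-only) and BERT (encoder-only).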
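The core operation inside a Transformer, scaled dot-product attention, is compact enough to sketch in plain Python. This is a simplified illustration: real models first pass each token through learned query, key, and value projections and run several attention heads in parallel, both of which are left out here. The token vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Each query scores every key, then takes a weighted average of the values."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs

# Self-attention over three hypothetical 2-dimensional token vectors:
# the same vectors serve as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = attention(tokens, tokens, tokens)
```

Each row of `mixed` is a blend of all three tokens, weighted by how strongly that token's query matches each key.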

2. Backpropagation

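- A method for training ANNs that uses the chain rule to work out how much each weight contributed to the output error, so that the weights can be updated to reduce it.
- A core technique in deep learning, popularized in 1986 by David Rumelhart, Geoffrey Hinton, and Ronald Williams.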
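A two-weight network is enough to show the chain rule at work. The sketch below assumes one sigmoid hidden unit, a linear output, and a squared-error loss; the input, target, and starting weights are arbitrary.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(x, w1, w2):
    """x -> hidden = sigmoid(w1 * x) -> y = w2 * hidden."""
    hidden = sigmoid(w1 * x)
    return hidden, w2 * hidden

def loss(x, target, w1, w2):
    _, y = forward(x, w1, w2)
    return 0.5 * (y - target) ** 2

def backprop(x, target, w1, w2):
    """Apply the chain rule from the loss back to each weight."""
    hidden, y = forward(x, w1, w2)
    dloss_dy = y - target                     # derivative of 0.5 * (y - target)**2
    grad_w2 = dloss_dy * hidden               # because y = w2 * hidden
    dloss_dhidden = dloss_dy * w2             # pass the error back through w2
    dhidden_dz = hidden * (1.0 - hidden)      # derivative of the sigmoid
    grad_w1 = dloss_dhidden * dhidden_dz * x  # because z = w1 * x
    return grad_w1, grad_w2

# Arbitrary illustrative values.
x, target, w1, w2 = 1.5, 1.0, 0.3, -0.4
grad_w1, grad_w2 = backprop(x, target, w1, w2)
```

A quick sanity check is to nudge `w1` or `w2` by a tiny amount, recompute `loss`, and confirm that the change matches the gradient returned by `backprop`.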

3. Long Short-Term Memory (LSTM)

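- A type of recurrent neural network, introduced by Sepp Hochreiter and Jürgen Schmidhuber in 1997, that uses gates to control what its memory cell keeps, updates, and outputs, letting it retain information over long sequences.
- Used in tasks like language modeling, speech recognition, and translation.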
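To show how gates let an LSTM hold on to information, here is a sketch of one time step of a cell whose input, hidden state, and memory are single numbers. Real LSTMs use vectors and learned weight matrices; the `params` layout and every value below are assumptions made for the example.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, params):
    """One time step of a scalar LSTM cell.

    params maps each gate name to (input weight, recurrent weight, bias).
    """
    def gate(name, activation):
        w_x, w_h, b = params[name]
        return activation(w_x * x + w_h * h_prev + b)

    forget = gate("forget", sigmoid)          # how much old memory to keep
    write = gate("input", sigmoid)            # how much new information to store
    candidate = gate("candidate", math.tanh)  # the new information itself
    output = gate("output", sigmoid)          # how much memory to expose

    c = forget * c_prev + write * candidate   # updated memory cell
    h = output * math.tanh(c)                 # updated hidden state
    return h, c

# Hypothetical parameters and a short input sequence.
params = {
    "forget": (0.5, 0.5, 1.0),
    "input": (0.5, 0.5, 0.0),
    "candidate": (1.0, 1.0, 0.0),
    "output": (0.5, 0.5, 0.0),
}
h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, -1.0]:
    h, c = lstm_step(x, h, c, params)
```

When the forget gate stays near 1 and the input gate near 0, the memory cell `c` passes through a step almost unchanged, which is how the cell carries information across many steps.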

The Future of AI and Neural Networks

The Nobel recognition of John Hopfield and Geoffrey Hinton highlights the transformative power of artificial neural networks. Their work laid the foundation for today's AI systems, which power everything from autonomous vehicles and medical diagnostics to personal assistants and language models.

As research continues to evolve, the future promises even more remarkable innovations, making machines not only smarter but increasingly intuitive in understanding and interacting with the world.
